Best Of

Re: Ability to turn off the "REQUEST MORE ACCESS" button for Social users

Thank you @DanBrinton. Is it going to be removed from the other areas as well? It shows up if you click any of the following as a social user:

Re: Can't Save View

I can think of a couple possible things that might cause an issue. Use named fields. Do not use an asterisk in a SELECT statement.

Try UNION ALL rather than just UNION. UNION removes duplicate rows, while UNION ALL keeps all rows.

Make sure your fields are consistent in alias and type on both sides of the union. Try your view with just a couple of fields at first to see if you can identify an offending field. You may need to use CAST so that a field matches the data type on the other side of the union, such as CAST(order_id AS VARCHAR) AS order_id.

Verify you don't have duplicate names or aliases in your select, such as SELECT id, order_id AS id.

Eliminate functions and simplify queries with a CTE or ROW_NUMBER. If all else fails, use Magic ETL.
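The UNION and CAST tips above can be sketched with a small runnable example. This uses Python's built-in sqlite3 module as a stand-in, not Domo's SQL engine, so the exact CAST type name may differ in your DataFlow. The tables `online_orders` and `store_orders` are hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical tables: online_orders stores order_id as INTEGER,
# store_orders stores it as TEXT, so the two sides disagree on type.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE online_orders (order_id INTEGER, amount REAL);
    CREATE TABLE store_orders  (order_id TEXT,    amount REAL);
    INSERT INTO online_orders VALUES (1, 10.0), (2, 20.0);
    INSERT INTO store_orders  VALUES ('1', 10.0), ('3', 30.0);
""")

# Named fields (no asterisk), matching aliases, and an explicit CAST
# so order_id has the same type on both sides of the union.
BRANCHES = """
    SELECT CAST(order_id AS VARCHAR) AS order_id, amount FROM online_orders
    {op}
    SELECT CAST(order_id AS VARCHAR) AS order_id, amount FROM store_orders
"""

def run_union(op):
    """Run the two-branch query with the given set operator."""
    return sorted(conn.execute(BRANCHES.format(op=op)).fetchall())

union_rows = run_union("UNION")          # duplicate rows removed
union_all_rows = run_union("UNION ALL")  # every row kept

print(len(union_rows), len(union_all_rows))
```

Order 1 appears in both tables with the same amount, so UNION collapses it to one row while UNION ALL keeps both copies. If your view fails to save, starting from a two-field query like this and adding fields back one at a time is one way to find the offending field.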

Re: AI Academy Webinar Question: Is it possible for the AI Agent to translate ETL logic into SQL?

You don't need to give it any additional tools; you would just need to adjust the prompt. You could ask it whether it can generate SQL that would produce the same outputs. I can't say from experience how well it would do that, because there's a lot of nuance and it can't see your data (as we configured it), but it's something you could certainly play with.

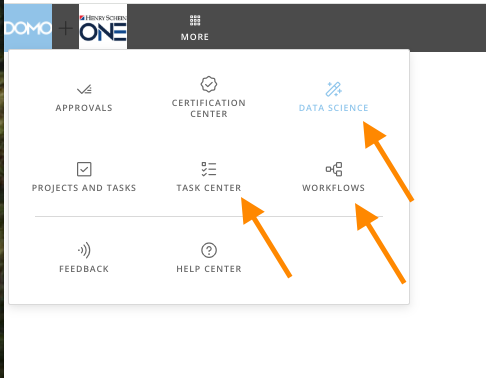

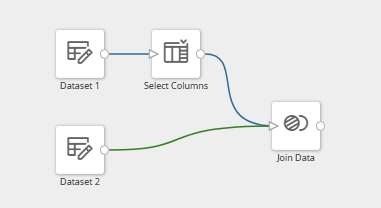

See underlying SQL script in Magic ETL?

For example, I have this ETL and I want to see the underlying SQL script that Domo uses to run it. Is it possible?

Re: AI Readiness

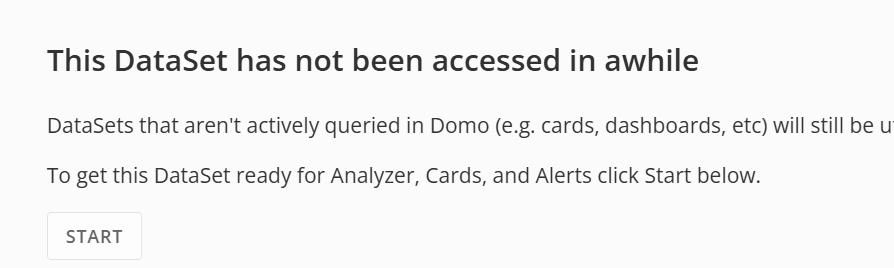

@ArborRose I have a lot of datasets that do not have any AI readiness on them and I can still access the Lineage.

The only time I can't access Lineage is when the dataset hasn't been accessed in a while and Domo "archives" it. I get this message at the bottom of the Overview tab. Once I click on Start, I can access the Lineage section again.

Re: Ability to turn off the "REQUEST MORE ACCESS" button for Social users

@TejalGovila, @ColemenWilson and all - we'll be releasing a fix for this on Tuesday 2/10.

Re: Domo Community Declining - Oliver to Blame?

I would suspect the very active Slack is the bigger culprit

Re: Domo Community Declining - Oliver to Blame?

Still water reflects the sky; sunshine gathers on the surface. 🌞

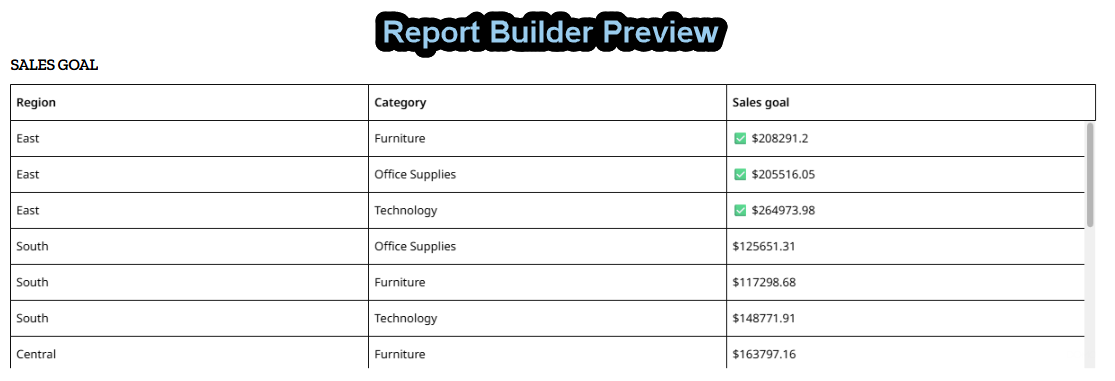

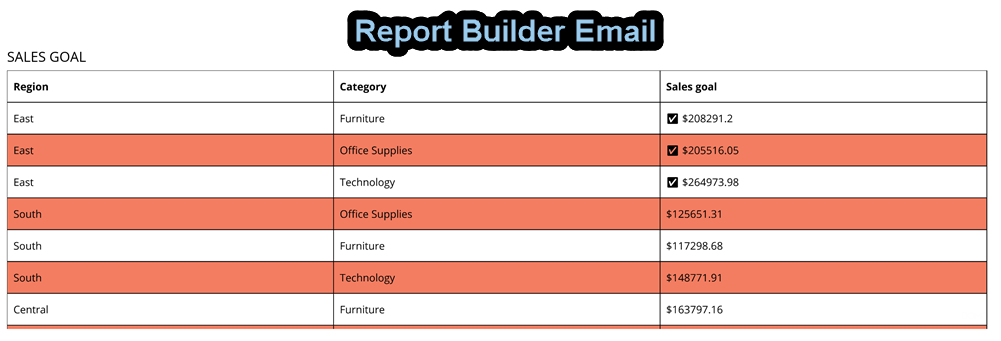

Re: Report Builder Table Formatting

Hello @DavidChurchman,

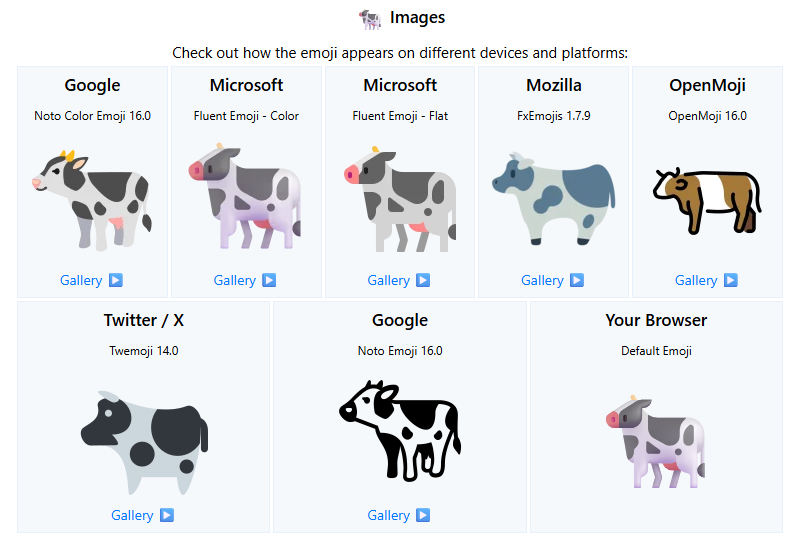

I noticed that in Report Builder, even when you have alternating row color turned off in the App, it still shows up in the generated email. I would think this is not intended behavior and is worth raising a case for. I also see the same behavior with emojis that you are seeing. It appears that Report Builder generates the email using the Google Noto Emoji version of the emoji (black & white) rather than the default (typically Microsoft Fluent Emoji - Color) that is shown in the browser.

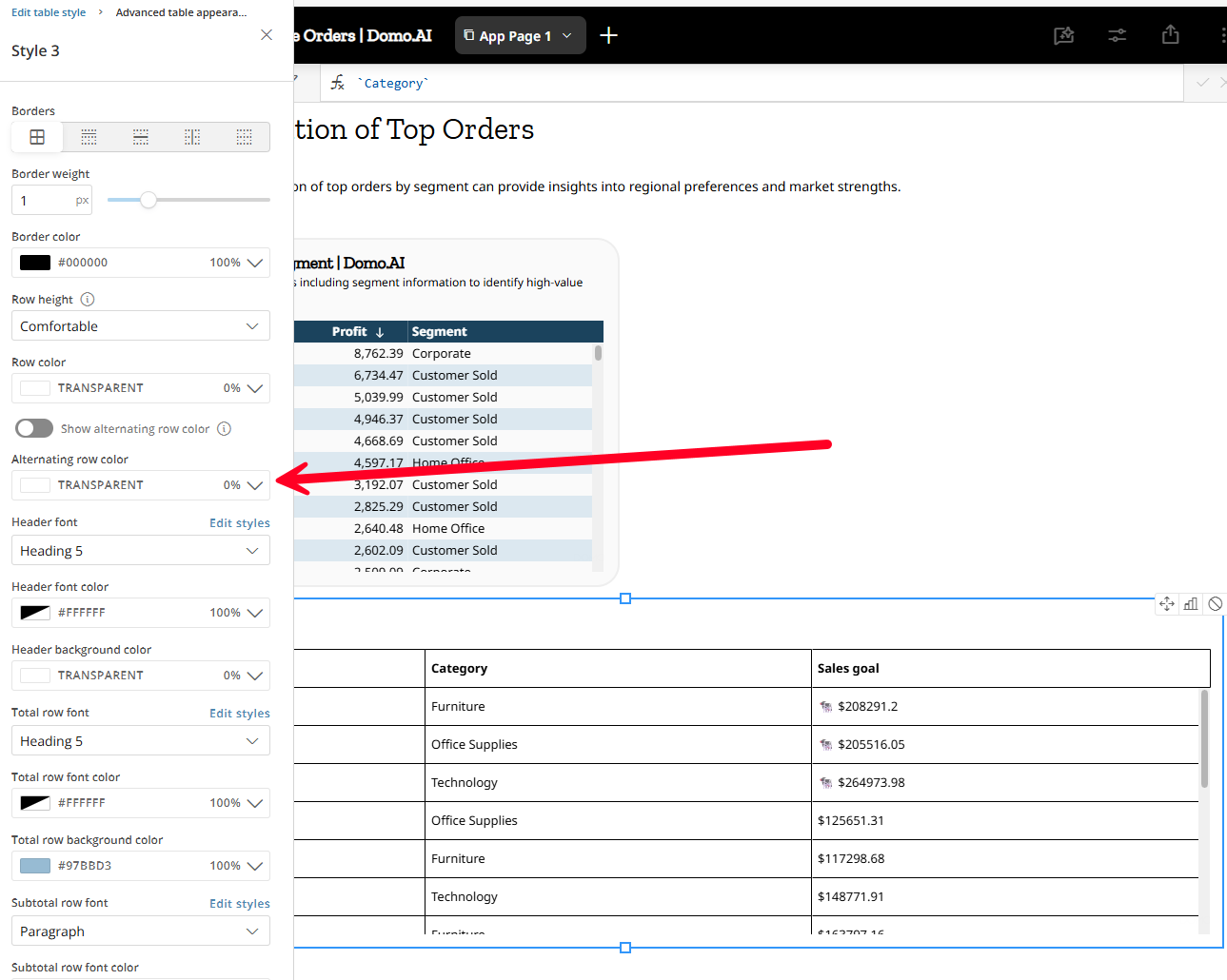

I was able to get the alternating row color to go away by setting the alternate row color to be the same color as normal rows, then re-creating my report.

I changed the emoji to a cow, changed this setting under table styles, then deleted and re-created my report:

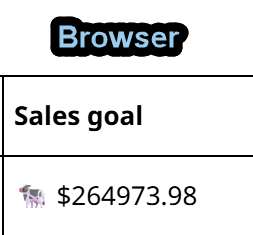

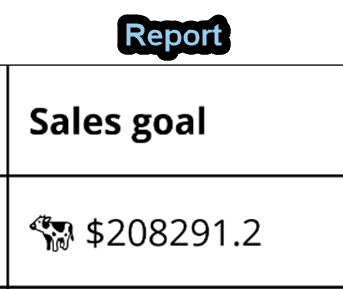

Zoomed in on Emojis for Comparison:

From emojiterra site:

🐄 Hopefully this is helpful! 🙂

Re: Data in Email Subject Line

If you select the "Download from link to page" option and then choose the file type "Email Body as one row", it will create a column for the email subject line and a column for the date/time received.