Hello,

I'm trying to import a PennyLane table containing 58,000 rows into Domo via the JSON connector. The PennyLane API uses a pagination system where each query retrieves 100 rows at a time.

My connector works correctly without pagination (I retrieve the first 100 rows). However, as soon as I enable pagination and run the full import, it never finishes. I waited 25 minutes before stopping it, whereas it shouldn't take more than 5 minutes.

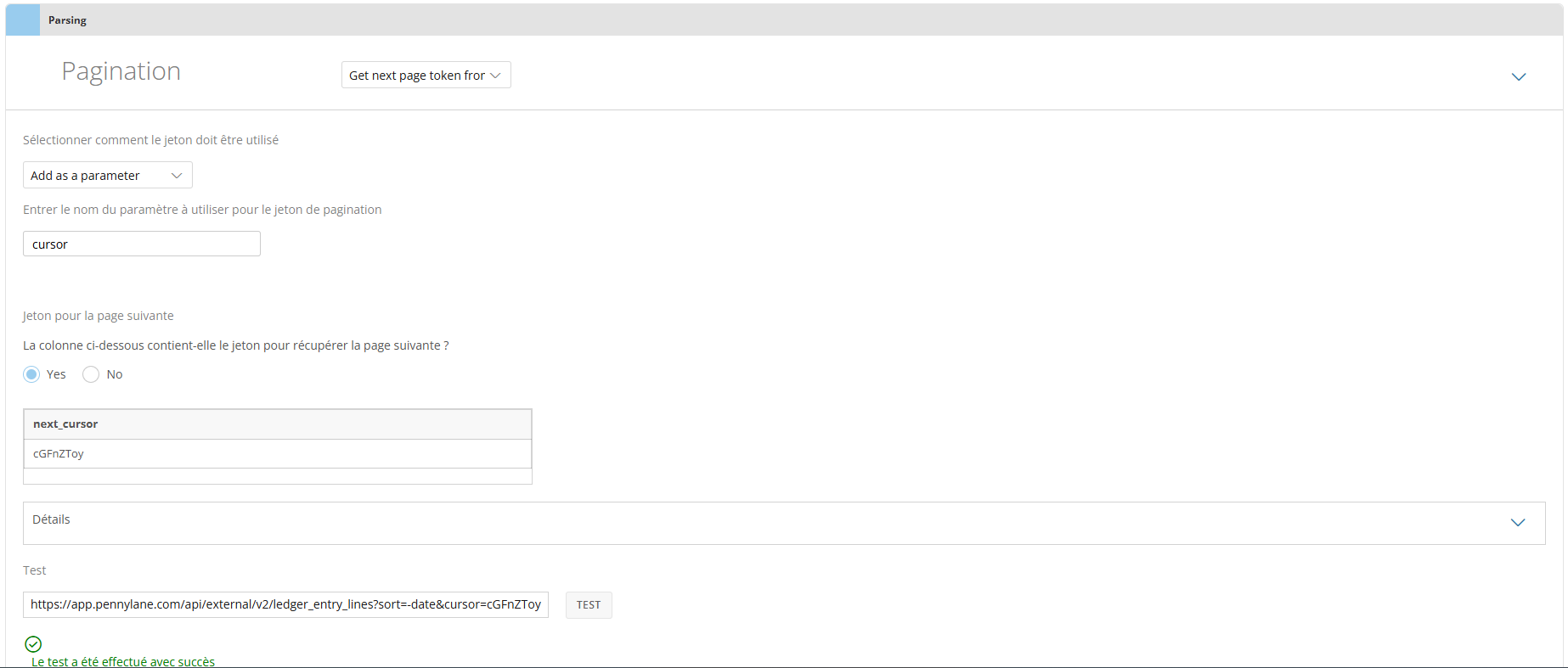

The API uses a pagination system based on two elements returned in each response:

- `has_more` (boolean): indicates whether there are more results to retrieve (`true` means another query is required).

- `next_cursor` (string | null): the cursor to pass in the `cursor` parameter of the next query. If `next_cursor` is null, there is no more data to retrieve.
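Here is the stop rule above as a minimal Python sketch. It is not Domo's connector logic. `fetch_page` is a hypothetical callable standing in for one HTTP request, and the `items` key for the returned rows is an assumption about the response shape. The in-memory fake API exists only so the loop can run on its own.

```python
from typing import Any, Callable, Optional

# Hypothetical signature: fetch_page(cursor) -> parsed JSON body of one response.
FetchPage = Callable[[Optional[str]], dict[str, Any]]


def fetch_all(fetch_page: FetchPage) -> list[Any]:
    """Follow cursor pagination until has_more is false or next_cursor is null."""
    rows: list[Any] = []
    cursor: Optional[str] = None  # the first request is sent without a cursor
    while True:
        page = fetch_page(cursor)
        rows.extend(page.get("items", []))  # "items" is an assumed key name
        cursor = page.get("next_cursor")
        # Stop on either signal: has_more is false or next_cursor is null.
        if not page.get("has_more") or cursor is None:
            return rows


# In-memory stand-in for the API: 250 rows served 100 at a time.
DATA = list(range(250))


def fake_fetch_page(cursor: Optional[str]) -> dict[str, Any]:
    start = int(cursor) if cursor is not None else 0
    end = start + 100
    has_more = end < len(DATA)
    return {
        "items": DATA[start:end],
        "has_more": has_more,
        "next_cursor": str(end) if has_more else None,
    }


print(len(fetch_all(fake_fetch_page)))  # 250
```

Checking both fields is deliberate. Either signal alone should end the loop, so a response that sets only one of them correctly still terminates.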

The problem is that the connector only lets me base the pagination on `next_cursor`. On the last page, that value comes back as null (`None`), which may be what prevents the process from stopping.
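A null cursor might keep the loop alive if it gets serialized as the literal string `None` and sent back to the API. This is an assumption, not confirmed Domo behavior. Python's `urllib.parse.urlencode` shows how easily that can happen, and how omitting the parameter avoids it. The base URL is a placeholder, not the real PennyLane endpoint.

```python
from typing import Optional
from urllib.parse import urlencode

BASE_URL = "https://api.example.com/items"  # placeholder endpoint


def naive_url(cursor: Optional[str]) -> str:
    # urlencode calls str() on values, so None is sent as "cursor=None"
    return f"{BASE_URL}?{urlencode({'cursor': cursor})}"


def safe_url(cursor: Optional[str]) -> str:
    # Only add the cursor parameter when there actually is one
    params = {"cursor": cursor} if cursor is not None else {}
    query = urlencode(params)
    return f"{BASE_URL}?{query}" if query else BASE_URL


print(naive_url(None))  # https://api.example.com/items?cursor=None
print(safe_url(None))   # https://api.example.com/items
print(safe_url("abc"))  # https://api.example.com/items?cursor=abc
```

An API might treat `cursor=None` as an invalid or unknown cursor. If it then returns the first page again with `has_more` set to true, a loop that checks only the cursor would never end.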

🔹 How do I configure Domo to stop querying when `has_more` is `false`?

🔹 Is there a better approach to correctly handle this pagination?
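A defensive pagination loop can add two guards on top of the `has_more`/`next_cursor` check, whatever the connector itself supports. It can stop if the API hands back a cursor it has already seen, and it can cap the number of requests. At 58,000 rows and 100 rows per page, about 580 requests are expected. This is a minimal Python sketch. `fetch_page`, the `items` key, and the 10% margin on the page cap are hypothetical choices, not Domo or PennyLane settings.

```python
import math
from typing import Any, Callable, Optional

TOTAL_ROWS = 58_000
PAGE_SIZE = 100
EXPECTED_PAGES = math.ceil(TOTAL_ROWS / PAGE_SIZE)  # 580 requests
MAX_PAGES = EXPECTED_PAGES + EXPECTED_PAGES // 10  # hypothetical 10% margin: 638


def fetch_all_guarded(fetch_page: Callable[[Optional[str]], dict[str, Any]]) -> list[Any]:
    rows: list[Any] = []
    seen: set[str] = set()
    cursor: Optional[str] = None
    for _ in range(MAX_PAGES):
        page = fetch_page(cursor)
        rows.extend(page.get("items", []))  # "items" is an assumed key name
        cursor = page.get("next_cursor")
        if not page.get("has_more") or cursor is None:
            return rows  # normal end of data
        if cursor in seen:
            raise RuntimeError(f"cursor {cursor!r} repeated; API is looping")
        seen.add(cursor)
    raise RuntimeError(f"stopped after {MAX_PAGES} pages without reaching the end")


def looping_fetch(cursor: Optional[str]) -> dict[str, Any]:
    # Simulates an API that ignores the cursor and keeps returning the same page
    return {"items": [1], "has_more": True, "next_cursor": "abc"}


try:
    fetch_all_guarded(looping_fetch)
except RuntimeError as exc:
    print(exc)  # cursor 'abc' repeated; API is looping
```

Failing loudly is intentional. A run that stops with a clear error after a few seconds is easier to diagnose than one that spins for 25 minutes.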

Thanks for your help!

Here is my setup: