Hi people! I'm here again!

This time, I have a long execution time problem!

I have a raw data table with approximately 46 million rows.

Here is my first question: when I create a dataflow that processes those 46 million rows and generates an output dataset, is that dataset a physical table? Or will all the transformations run again every time I use that output dataset as an input dataset in another dataflow? I only need that transformation to run once, on the historical data.

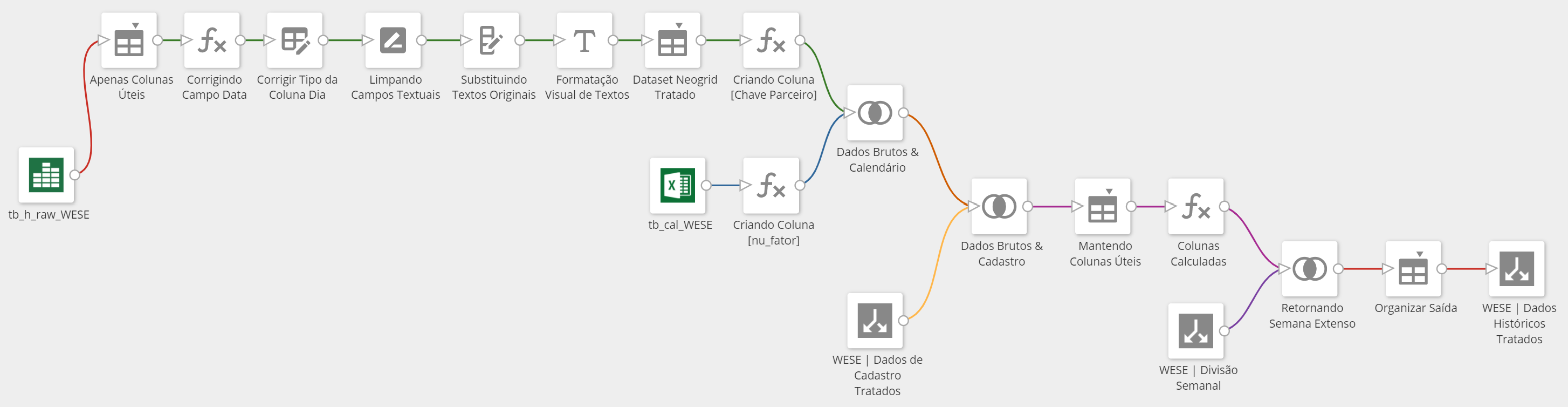

This dataflow processes the 46 million rows (takes ~20 min to run) and generates the dataset "WESE | Dados Históricos Tratados".

Every week, a new base is generated with the same column structure but with refreshed values, and I need to overwrite the matching rows in the table above. We use a date field (YYYY-MM-DD) in both tables to do so. By default, the user will refresh only the current month, but we sometimes reprocess older data, so the number of months may vary.

A second dataflow processes the weekly upload of approximately 2 million rows (takes ~1 min 35 s to run).

It generates the dataset "WESE | Carga Semanal Tratada", which will be used to "update" the main dataset.

With both ready to be used, I tried to create another dataflow in Magic ETL that LEFT joins them (historical on the left, weekly on the right), returning only one column from the right side and keeping only the rows where that column is NULL (that is, all rows that couldn't be found on the right side). After that, I simply added a UNION ALL with the weekly dataset.
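
To make the intended logic concrete outside of Magic ETL, here is a minimal pandas sketch of the same "keep unmatched historical rows, then append the weekly rows" pattern. It is only an illustration under assumptions: the function name `replace_by_date`, the column names `date` and `value`, and the sample rows are all hypothetical, and it is not how Magic ETL executes the flow. The sketch also reduces the weekly side to its distinct dates before joining, which is a choice made here and not something the dataflow above is known to do; joining on a date column that repeats on both sides without that step would multiply the rows.

```python
import pandas as pd


def replace_by_date(historical: pd.DataFrame,
                    weekly: pd.DataFrame,
                    date_col: str = "date") -> pd.DataFrame:
    """Drop historical rows whose date appears in the weekly load,
    then append the weekly rows (anti-join followed by UNION ALL)."""
    # Single right-side column: the distinct dates in the weekly load.
    weekly_dates = weekly[[date_col]].drop_duplicates()

    # LEFT join; the indicator column tells us which rows had no match.
    joined = historical.merge(weekly_dates, on=date_col,
                              how="left", indicator=True)

    # Equivalent of "filter rows where the right-side column is NULL".
    untouched = joined[joined["_merge"] == "left_only"].drop(columns="_merge")

    # UNION ALL: unmatched historical rows plus every weekly row.
    return pd.concat([untouched, weekly], ignore_index=True)


# Hypothetical sample data, not taken from the real datasets.
historical = pd.DataFrame({
    "date": ["2023-01-10", "2023-01-20", "2023-02-05", "2023-02-05"],
    "value": [1, 2, 3, 4],
})
weekly = pd.DataFrame({
    "date": ["2023-02-05", "2023-02-12"],
    "value": [30, 50],
})

result = replace_by_date(historical, weekly)
print(result)
```

In this sample, both historical rows dated 2023-02-05 are dropped because that date exists in the weekly load, and the two weekly rows are appended after the two January rows.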

When I ran it, it was still running after almost 60 min! OMG!

Am I doing something wrong?