Long Execution

Hi people! I'm here again!

This time, i have a e long execution problem!

I have a table with raw data with 46 million lines, approximately.

Here is my first question: When a create a dataflow that process that 46 million rows and generates a output dataset, that dataset is a physical table or anytime i use that output dataset like a input dataset in another dataflow it will run all the transformation again? I only need that transformation in the historical data, runs one.

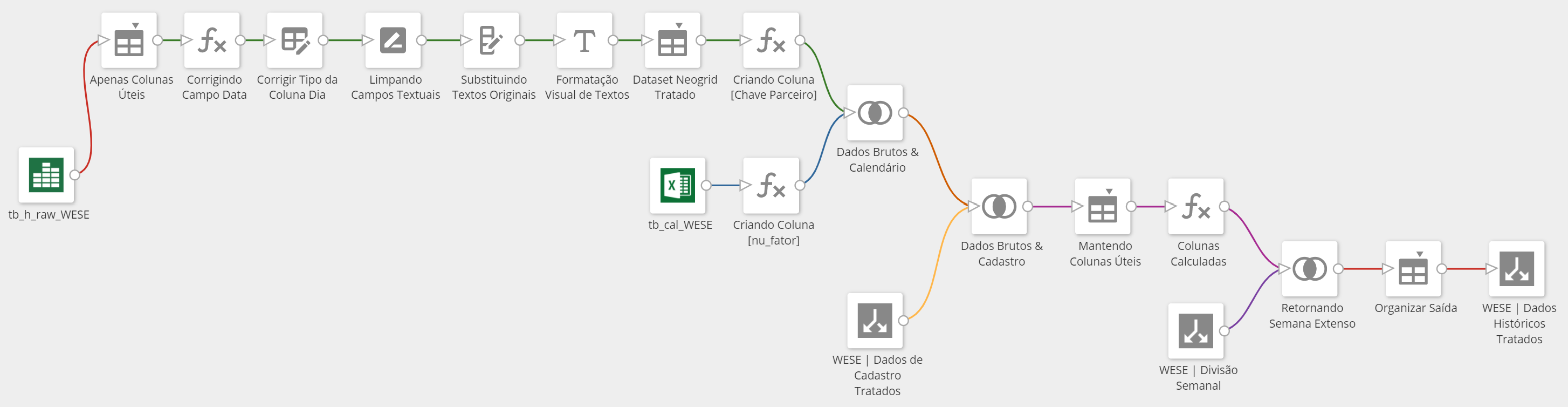

Dataflow to process the 46 million rows (take ~20min to run) and generates the dataset "WESE | Dados Históricos Tratados":

Weekly, a new base is generated with the same columns struture, but with refreshed values and i need to overwrite that table above. We use a date filed (YYYY-MM-DD) in both tables to do so. By default, the user will refresh only the current month, but we have reprocessing and the number of months may vary.

Dataflow to process the 2 million rows, approximately (weekly upload and takes ~1m:35s to run).

Generates the dataset "WESE | Carga Semanal Tratada" and will use to "update" the main dataset.

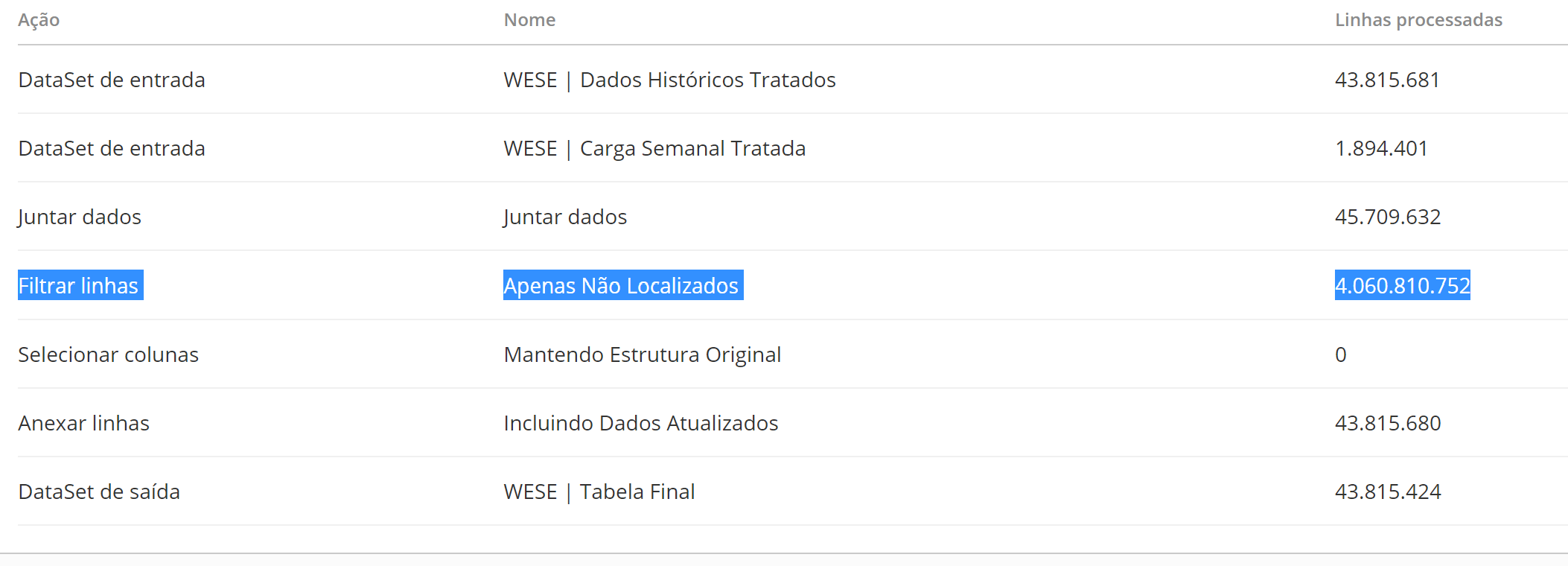

With both ready to be used, i tried to create in MagicETL another dataflow that joins them (LEFT), returning only one column in the right side and filtered only the rows with NULL inside that column (all rows that couldn't be located in the right side). After that, a simply placed a UNION ALL.

When i executed, after almost 60min, it still running! OMG!

Am i doing something wrong?

Best Answers

-

Are there other columns you can utilize in your join to restrict the number of rows being returned? Right now you have a one to many join happening which is causing the number of rows to skyrocket which it sounds like isn't intended. What columns define each row? Can you put all of those as your join columns?

To answer your first question:

When a create a dataflow that process that 46 million rows and generates a output dataset, that dataset is a physical table or anytime i use that output dataset like a input dataset in another dataflow it will run all the transformation again? I only need that transformation in the historical data, runs one.

If you're using a Magic ETL dataflow the output dataset is a physical table. A dataset view is not, it's a view so it will calculate when it's accessed.

**Was this post helpful? Click Agree or Like below**

**Did this solve your problem? Accept it as a solution!**1 -

it sounds like you need to implement a PARTITION model. in your ETL where you generated 4B rows, when you JOIN historical to new data, your JOIN criteria (as @GrantSmith surmised) is resulting in row growth.

In a classic partitioining model, once set up properly, you would identify the sets of dates from the historical data that you want to replace with new data. That's handled by your (just select the New_Date column & remove duplicates then JOIN to your Historical dataset. Then filter where New_Date is null.

If you're trying to combine dimensional data take a look at an UPSERT model. Typically I handle UPSERT by appending the data and using the rank and window tile (row_number) function to find the 'most recent' version of the row and only keep the most recent.

Here's a couple videos to help you out. Good luck.

Jae Wilson

Check out my 🎥 Domo Training YouTube Channel 👨💻

**Say "Thanks" by clicking the ❤️ in the post that helped you.

**Please mark the post that solves your problem by clicking on "Accept as Solution"1

Answers

-

I didn't understand that!!

JOIN structure

Filter structure

Why so many records?!?!

0 -

You'll need to examine your join conditions. It sounds like your join isn't restrictive enough and it's generating multiple rows. Are there additional columns you can filter on? Are you able to filter your dataset before your join to only include the records you need?

**Was this post helpful? Click Agree or Like below**

**Did this solve your problem? Accept it as a solution!**1 -

I change the inferior part to represent a table only with the column [Dia] without duplicates, but the result was the same:

Transforming the superior part into a Dataset View, solve the execution (just 15min to run), but i will not be able to update this automatically. I need to update the main table every weekly execution:

0 -

Are there other columns you can utilize in your join to restrict the number of rows being returned? Right now you have a one to many join happening which is causing the number of rows to skyrocket which it sounds like isn't intended. What columns define each row? Can you put all of those as your join columns?

To answer your first question:

When a create a dataflow that process that 46 million rows and generates a output dataset, that dataset is a physical table or anytime i use that output dataset like a input dataset in another dataflow it will run all the transformation again? I only need that transformation in the historical data, runs one.

If you're using a Magic ETL dataflow the output dataset is a physical table. A dataset view is not, it's a view so it will calculate when it's accessed.

**Was this post helpful? Click Agree or Like below**

**Did this solve your problem? Accept it as a solution!**1 -

it sounds like you need to implement a PARTITION model. in your ETL where you generated 4B rows, when you JOIN historical to new data, your JOIN criteria (as @GrantSmith surmised) is resulting in row growth.

In a classic partitioining model, once set up properly, you would identify the sets of dates from the historical data that you want to replace with new data. That's handled by your (just select the New_Date column & remove duplicates then JOIN to your Historical dataset. Then filter where New_Date is null.

If you're trying to combine dimensional data take a look at an UPSERT model. Typically I handle UPSERT by appending the data and using the rank and window tile (row_number) function to find the 'most recent' version of the row and only keep the most recent.

Here's a couple videos to help you out. Good luck.

Jae Wilson

Check out my 🎥 Domo Training YouTube Channel 👨💻

**Say "Thanks" by clicking the ❤️ in the post that helped you.

**Please mark the post that solves your problem by clicking on "Accept as Solution"1 -

Thank you both for the answers!!!

I just using one column in my join because i just need to replace all the dates that exists in the new table, excluding the old ones.

Let me see if i understand, when i ran the Dataflow "WESE | Dados Históricos Tratados" and place a "Dataset Output" with the same name, i won't run again when i plece the dataset as a star for a secundary dataset? Is it really a physical table? Great! That's what i need. Just don't understand why only run when i created a View 🤔

I thought something like that (date partitions), like big data model. I just really didn't know how to implement.

I'll see the recommended videos!

0 -

Excelent Help, guys! Thanks a lot! 😊

Thanks to you both, i was able to generate the process, performing a mixture of the two ideas. Now the whole process runs in just over 10min, even working with the 50million rows!

1 -

Recursives are very expensive and will get more time consuming over time.

see if UPSERT might not be a better idea.

Jae Wilson

Check out my 🎥 Domo Training YouTube Channel 👨💻

**Say "Thanks" by clicking the ❤️ in the post that helped you.

**Please mark the post that solves your problem by clicking on "Accept as Solution"0

Categories

- All Categories

- 1.4K Product Ideas

- 1.4K Ideas Exchange

- 1.4K Connect

- 1.2K Connectors

- 284 Workbench

- 4 Cloud Amplifier

- 4 Federated

- 2.9K Transform

- 88 SQL DataFlows

- 554 Datasets

- 2.2K Magic ETL

- 3.3K Visualize

- 2.3K Charting

- 561 Beast Mode

- 8 App Studio

- 27 Variables

- 577 Automate

- 140 Apps

- 414 APIs & Domo Developer

- 22 Workflows

- 1 DomoAI

- 28 Predict

- 12 Jupyter Workspaces

- 16 R & Python Tiles

- 350 Distribute

- 90 Domo Everywhere

- 258 Scheduled Reports

- 2 Software Integrations

- 91 Manage

- 88 Governance & Security

- 9 Product Release Questions

- Community Forums

- 41 Getting Started

- 27 Community Member Introductions

- 85 Community Announcements

- 4.8K Archive